AI-driven fraud is rapidly transforming Asia's risk landscape, putting financial institutions under urgent pressure to respond effectively.

At 9:23 a.m. in Hong Kong, a finance officer joined what appeared to be a routine video call with her regional leadership team. She recognized the faces, trusted the voices, and did not question the urgency. The conversation flowed naturally, each exchange reinforcing the appearance of authenticity. Then a request came through, demanding swift action that could not be postponed. She hesitated for a fleeting moment, her finger hovering over the confirmation button, before familiarity pulled her forward and she approved it. Twenty-two minutes later, the magnitude of what had happened became clear: USD 25 million had been transferred. None of the other participants on the call was real.

This deepfake attack, now well documented by Hong Kong police, marked a turning point. By 2025, fraud in Asia had shifted from opportunistic to highly engineered. Scammers now use AI, automation, and cross-border coordination to operate at the scale of multinational enterprises. As Southeast Asia enters 2026, these attacks are accelerating faster than institutions can adapt.

Fraud is no longer merely evolving; it is scaling rapidly.

The Mounting Pressure Behind the Surge

This shift did not occur overnight. It resulted from three converging factors: the widespread accessibility of AI tools, scam syndicates adopting startup-like operations, and the rapid digitization of onboarding outpacing fraud defenses.

In 2025, consumer-grade AI applications could clone voices from brief audio clips or generate convincing fake videos from a single selfie. Singapore police reported a 300% increase in AI-assisted impersonation scams. INTERPOL intervened in operations trafficking workers into ‘fraud factories’ producing scripts, deepfake content, and malware. Indonesia’s OJK recorded a significant rise in digital fraud complaints as lenders and fintechs struggled to maintain effective verification.

Together, the fraud cases of 2025 are reshaping how we understand and prevent fraud in the year ahead. Avoiding it is not easy: AI tools and the content they generate are evolving rapidly, and telling what is real from what is fabricated takes constant vigilance.

PowerCred is a global data solutions company that also uses AI to simplify processes, and we work closely with financial institutions, digital platforms, and onboarding teams across Southeast Asia. From that vantage point, these incidents were not isolated red flags. They were early signals of a systemic shift. Throughout 2025, we saw fraud attempts grow in sophistication, coordination, and speed. We also saw how quickly attackers adapted to new controls, often faster than traditional verification tools could respond.

Below are the major fraud frontiers that we expect to define the region in 2026, and what institutions must prepare for.

The Five Fraud Frontiers Reshaping 2026

1. Deepfake Fraud Becomes Operational, Not Experimental

The Hong Kong case was not an isolated incident; it served as a blueprint. Deepfake voice calls from ‘CEOs,’ manipulated identity videos, and AI-generated proof-of-income clips have increased across Singapore, Malaysia, and Indonesia. Regulators warn that in 2026, deepfakes will target KYC processes, internal bank approvals, vendor payments, and insurance claims.

A selfie is no longer sufficient proof of identity.

2. Ghost Borrowers: AI-Crafted Synthetic Identities as Economic Weapons

Malaysia and the Philippines reported an increase in applicants with partially fabricated identity footprints. These ‘ghost borrowers,’ with AI-generated tax records and payslips, often passed manual reviews. Some lenders acknowledged they could not distinguish real borrowers from synthetic ones.

The next trend will be fully synthetic companies with fabricated financial histories.

3. Account Takeover Evolves Into Full Automation

Incidents involving Indonesian APK malware, Philippine SIM swaps, and full-balance drains in Singapore indicate that account takeover attacks now operate at scale. Bots collect credentials, malware captures OTPs, and voicebots impersonate bank agents. Victims are often unaware until it is too late.

Soon, account takeover will resemble remote control rather than traditional theft.

4. Cross-Border Fraud Networks Merge Into a Single Ecosystem

A scam originating in Myanmar can impact a lender in Jakarta, be cashed out through a wallet in Manila, and disappear into cryptocurrency within an hour. INTERPOL’s 2025 breakthroughs revealed only a small portion of a much larger network.

Fraud now operates globally, even as regulation remains local.

5. Authority Impersonation Reaches a Dangerous New Maturity

There are reports of fake MAS officers, replica Bank Negara websites, and synthetic OJK inspectors contacting businesses for ‘verification.’ In 2026, impersonation will extend beyond messages to include fake regulators who appear and act indistinguishably from real officials.

If trust fails, the entire system is at risk.

2026: When Trust Becomes the Hardest Currency

Each case involves people who trusted what they saw or heard: a mother convinced her son needed help, a young worker whose ID was sold and duplicated across multiple platforms, and a small business owner harmed by synthetic borrowers. Both the emotional and the economic costs are rising.

Fraud in 2026 requires urgent action rather than optimism. Defenses must shift from static checks to real-time, multi-layered intelligence, including behavioral cues, document authenticity signals, metadata anomalies, continuous authentication, and AI-driven deepfake detection. Verification processes must evolve as quickly as deceptive tactics.

Institutions should assume that every onboarding process and communication channel is already targeted by adversarial AI. In 2026, Southeast Asia faces criminals who do not merely exploit vulnerabilities but manufacture convincing realities using artificial identities, documents, and authorities.

Only institutions that modernize now will remain ahead. Those who delay risk operating in crisis mode throughout 2026. If your organization is planning next year’s fraud and onboarding strategy, PowerCred offers purpose-built tools to address these emerging challenges.

PowerCred: Strengthening Digital Trust for the AI Era

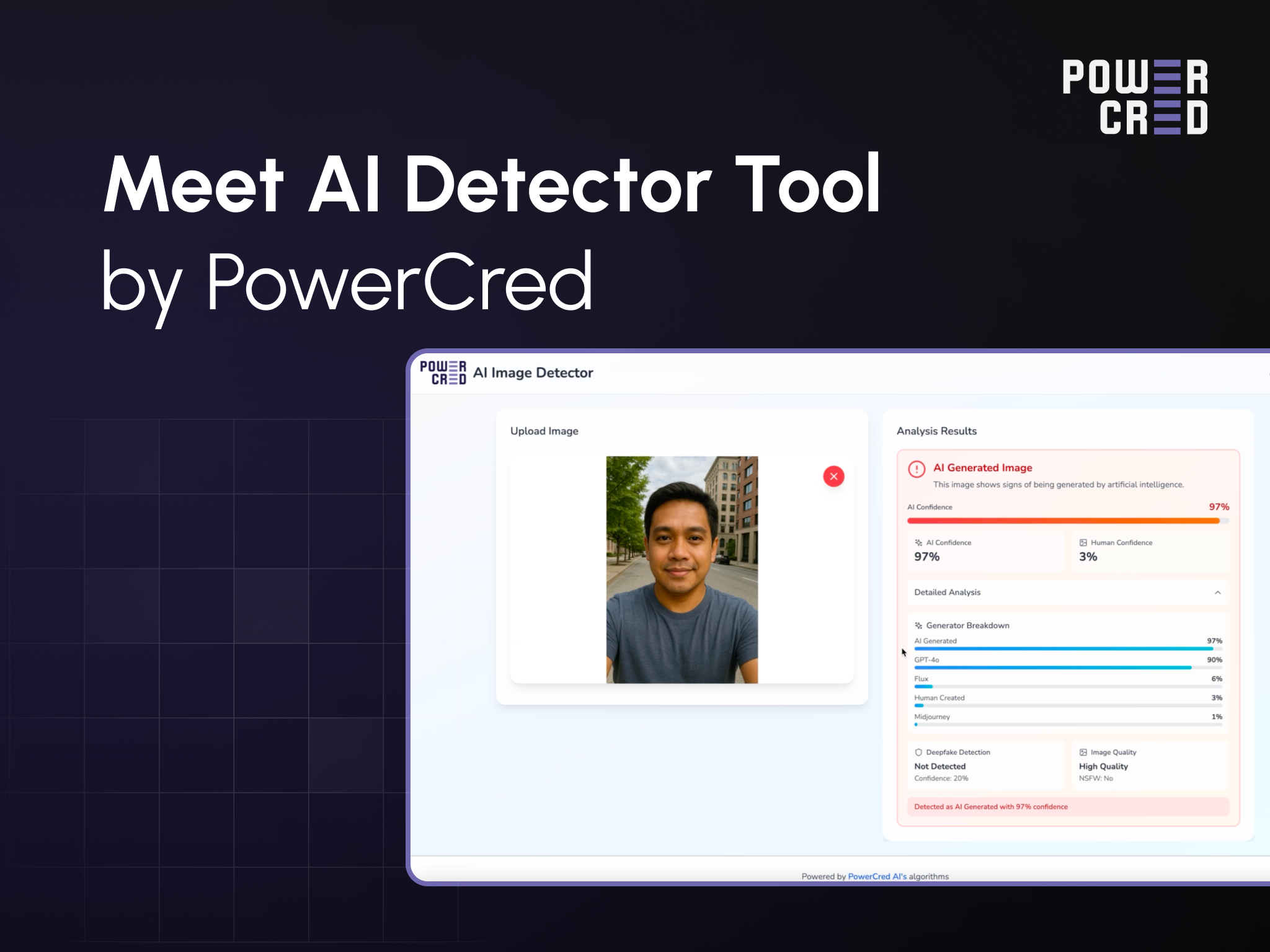

PowerCred helps financial institutions and digital platforms across Southeast Asia strengthen their defenses against this new wave of fraud. Our AI-powered tools identify deepfake content, synthetic identities, and tampered documents, while our verification and decisioning capabilities provide teams with the intelligence needed to assess risk quickly and accurately.

In a world where threats evolve monthly, PowerCred enables organizations to operate with confidence by protecting both customers and business integrity.

If your team is planning its fraud and onboarding strategy for 2026, now is the time to act.