AI-generated content has become so realistic that it is almost impossible to tell apart from what people create. Artificial intelligence now powers everyday filters, creative media, voice replicas, and videos. The same technology makes it easy to produce virtual influencers, deepfakes, and forged documents and attach them to online identities, which makes it much harder to check whether something is real.

Tasks that once required specialist skills can now be done by anyone with basic tools. This lowers the barrier to innovation, but it also lowers the barrier to deception. For instance, AI can be used to create synthetic identities, which allow fraudsters to open fake bank accounts or apply for loans without raising suspicion. Similarly, AI-generated documents can be used to support false claims in insurance or financial services, which puts significant strain on verification processes.

When Innovation Enables Manipulation

AI-powered content creation enables bad actors to commit fraud with greater precision and on a larger scale than ever before. They can now generate realistic faces to pass onboarding checks, alter ID documents without leaving obvious signs, fake income statements, build false identities, and produce deepfake videos that are hard to spot. These threats have become everyday problems for risk and compliance teams in Southeast Asia and elsewhere.

The main problem isn’t a lack of tools, but that many organizations use outdated ones. Traditional verification methods can’t spot algorithmic manipulation. Manual checks often miss small changes. OCR systems can extract data, but they can’t verify whether a document is real. Even experts are finding it harder to tell real content from advanced AI fakes. The basics of trust, including originality, authenticity, and human review, are being shaken. To counter these threats, organizations should review their current verification processes now and consider piloting modern AI-detection tools. Both steps give risk managers concrete ways to strengthen their defenses against AI-driven fraud.

The Structural Gap in Legacy Verification Systems

Legacy verification systems leave organizations exposed to new and more advanced AI-driven fraud. These tools were designed to stop traditional fraud, and they can’t detect subtle, machine-generated fakes. As a result, businesses face greater operational, reputational, and compliance risks, which underscores the need for modern solutions.

Modern fraud is widespread, constantly evolving, and increasingly automated, so it moves faster than legacy verification systems can adapt. Without updated tools, older processes can’t protect businesses from these new threats.

PowerCred’s AI Detector Platform as a Necessary Evolution

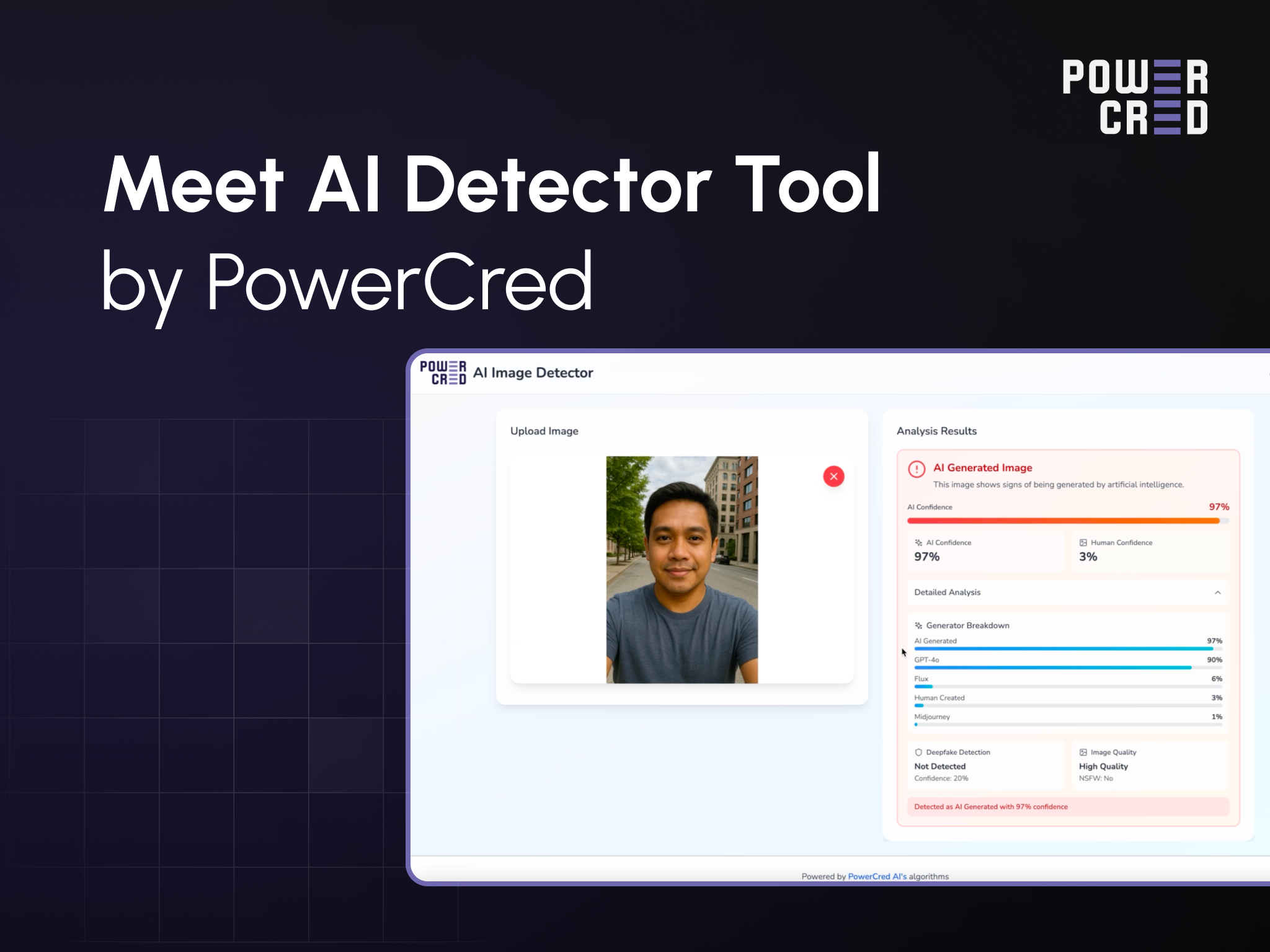

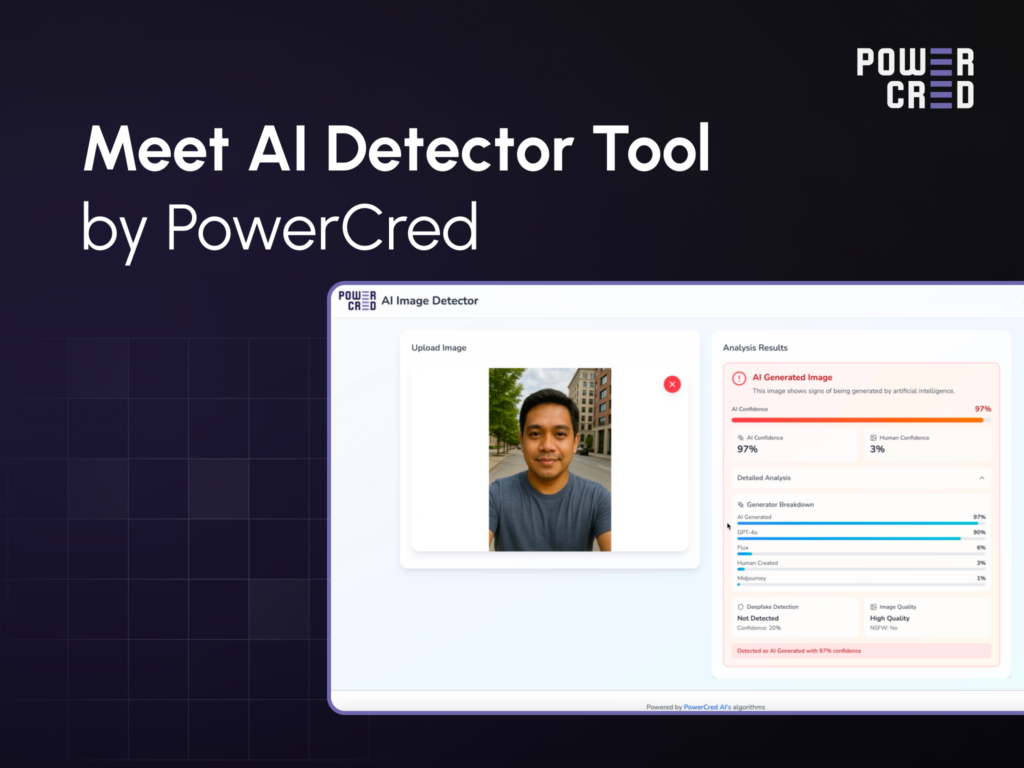

PowerCred’s AI Detector Platform represents a major step beyond basic AI content detection. Capable of analyzing diverse documents, images, and videos, the platform uses advanced technologies, including deep learning for image recognition and forensic methods, to reveal subtle AI-driven alterations. It also identifies the platform or method behind the manipulation, strengthening the reliability of its analyses.

This capability gives users a clear, reliable understanding of what is real and what has been artificially created. In a world where synthetic media is becoming increasingly common, PowerCred helps teams stay grounded, informed, and confident in the authenticity of the information they rely on.

PowerCred: The Powerful Fraud-Fighter Solution

PowerCred strengthens fraud defenses by integrating advanced AI detection into onboarding, verification, and risk assessment, enabling efficient, confident decision-making for companies facing modern fraud threats across Asia.
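To make the idea of integrating detection into onboarding more concrete, here is a minimal sketch in Python of how a manipulation score might feed an onboarding decision. Everything in it is an assumption made for illustration: the `DetectionResult` fields, the `route_application` function, and the two thresholds are hypothetical and do not describe PowerCred’s actual API or scoring.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    MANUAL_REVIEW = "manual_review"
    REJECT = "reject"


@dataclass(frozen=True)
class DetectionResult:
    """Hypothetical detector output, not an actual PowerCred response format."""
    manipulation_score: float  # assumed scale: 0.0 = likely genuine, 1.0 = likely AI-altered
    suspected_method: Optional[str] = None  # illustrative label such as "face_swap"


def route_application(
    result: DetectionResult,
    review_threshold: float = 0.3,  # illustrative value, not a recommended setting
    reject_threshold: float = 0.8,  # illustrative value, not a recommended setting
) -> Decision:
    """Map a manipulation score to an onboarding decision."""
    if not 0.0 <= result.manipulation_score <= 1.0:
        raise ValueError("manipulation_score must be between 0 and 1")
    if result.manipulation_score >= reject_threshold:
        return Decision.REJECT
    if result.manipulation_score >= review_threshold:
        # Ambiguous cases go to a human reviewer rather than being auto-decided.
        return Decision.MANUAL_REVIEW
    return Decision.APPROVE


if __name__ == "__main__":
    sample = DetectionResult(manipulation_score=0.5, suspected_method="face_swap")
    print(route_application(sample).value)
```

Keeping a middle band that routes to manual review is one possible design choice: clear cases are handled automatically, while borderline documents still get human judgment.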

In a recent case study, a leading financial institution in Southeast Asia reduced its fraud rate by 30% in just six months after deploying PowerCred’s solution. The impact went beyond fraud reduction: the institution also gained faster approvals, stronger customer trust, and a more scalable onboarding engine for 2026 and beyond.